Why AI governance is a key priority for financial institutions

MAS recently released its guidelines on AI risk management.

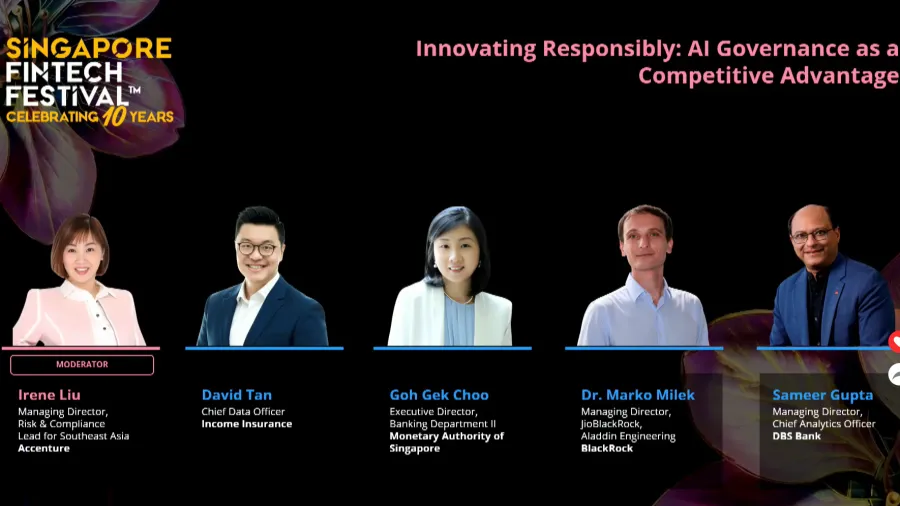

AI governance must be an integral, evolving part of every financial institution’s strategy, panellists said at the “Innovating Responsibly: AI Governance as a Competitive Advantage” panel on Day 3 of the Singapore Fintech Festival.

Just recently, the Monetary Authority of Singapore (MAS) issued a consultation paper proposing guidelines on AI Risk Management for all financial institutions (FIs), outlining supervisory expectations for the use of artificial intelligence.

Goh Gek Choo, executive director of the Banking Department II at MAS, said the guidelines were built on past work done over the years, adding that industry players had been seeking greater regulatory certainty and clarity around supervisory expectations as AI adoption scales. She noted that strong governance is becoming essential to support safe deployment, and that “if you do this well, you get the comfort, then you can make the progress.”

Goh said there were two areas where MAS sought balance. The first was ensuring sufficient clarity and flexibility.

“The clarity will allow the guidelines to be actionable. The guidelines have moved beyond the FEAT principles from the why to the how. The guidelines also need to be flexible to accommodate financial institutions of different sizes and risk profiles so they can implement the guidelines on a proportionate basis,” Goh said.

Goh added that MAS had to strike a careful balance when drafting the new guidelines. The aim was to develop principles-based rules that provide clarity without becoming overly prescriptive, so that the framework supports innovation rather than getting ahead of it.

She noted that a second balance was to address current AI-related risks while preparing for those that may emerge as technologies evolve. Although the guidelines are anchored on MAS’s existing supervisory expectations—covering oversight, risk management, policies and frameworks, lifecycle controls, and capability—they also highlight additional considerations specific to AI deployment.

Goh pointed to future risks arising from developments such as AI agents, which could introduce new vulnerabilities if given greater autonomy within an institution’s systems, including the potential for malicious prompts or unauthorised data access. She added that the guidelines are designed to apply broadly across all AI technologies and applications, and encouraged industry feedback as MAS refines its approach.

Sameer Gupta, managing director and chief analytics officer at DBS Bank, welcomed the release of the guidelines.

“On AI, from a DBS standpoint, we declare our value from AI. This year, we are on track to deliver nearly $1b value from AI,” Gupta said.

Gupta explained that the bank measures this value through extensive A/B testing, an approach it has used for several years to assess the impact of AI-driven interventions. Gupta said AI is now “core and principal” to DBS’s operations rather than a side initiative, making strong governance essential to scaling its use safely.

“The analogy that I will give is, for a car to go fast, the other thing that it needs, other than the engine, is brakes. If you don’t have brakes, even a car with the best engine can’t go fast. The AI governance is like that. When it’s done right, and it allows us to balance the speed and innovation, it keeps us grounded on things which are material and focused,” Gupta said.

David Tan, chief data officer at Income Insurance, said the growing use of embedded AI within both core and traditional technology systems has made governance more complex. He noted the importance of grounding the organisation’s knowledge base to ensure that “whatever non-deterministic outcomes we get out of the AI systems, we want to make sure it is contextually relevant to what we desire as an organisation.”

With rapid technological change making it difficult to manage uncertainty, Tan said the focus is on reducing ambiguity through industry collaboration that clarifies principles, risks, and shared methodologies. “What we can do is limit ambiguity,” he added, with institution-specific processes built on top of that foundation as technology evolves.

Marko Milek, managing director, JioBlackRock, Aladdin Engineering at BlackRock, said the handbook has been valuable in reinforcing and aligning the firm’s existing governance practices.

He noted that BlackRock has long applied AI across its business, from traditional models to generative AI, and embeds governance as a horizontal layer across all functions. While much of the firm’s technology, vendor and data controls already addressed many AI risks, Milek said newer challenges—such as hallucinations in generative models—required additional safeguards.

“We fundamentally believe we’re still at a point where human in the loop is an essential control,” he said, adding that BlackRock maintains a “walled garden” approach to ensure proprietary data does not leave the organisation or train external models.

During the panel, Goh was asked what one caution she would give to the industry so that AI governance doesn’t just become a compliance or regulatory requirement.

“Do not stand still in a moving scene because AI risk management needs to be adaptive to the dynamic nature of AI systems and models. So even if you have done everything that you need before AI deployment, there can still be model staleness. There can be performance degradation due to model data drifts, and therefore, ongoing monitoring of how your model is performing and whether the model remains fit for purpose is important,” Goh said.
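For readers who want a concrete picture of the kind of ongoing monitoring Goh describes, one common way to flag data drift is the population stability index (PSI), which compares how a model input or score was distributed at deployment with how it is distributed now. The sketch below is illustrative only and is not drawn from the MAS guidelines or from any panellist's practice; the function name `population_stability_index`, the ten-bin split, and the 0.25 review threshold are assumptions based on a widely used rule of thumb.

```python
import math
from typing import Sequence


def population_stability_index(
    baseline: Sequence[float],
    current: Sequence[float],
    bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """Return the PSI between a baseline sample and a current sample.

    Bins are equal-width over the baseline range (an assumption of this
    sketch); current values outside that range fall into the edge bins.
    """
    lo, hi = min(baseline), max(baseline)
    if hi == lo:
        raise ValueError("baseline must contain more than one distinct value")
    width = (hi - lo) / bins

    def proportions(values: Sequence[float]) -> list:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values
            counts[idx] += 1
        total = len(values)
        # Floor each share at eps so the logarithm below is always defined.
        return [max(c / total, eps) for c in counts]

    p = proportions(baseline)
    q = proportions(current)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


# Hypothetical data: scores at deployment versus scores seen later.
baseline_scores = [i / 100 for i in range(100)]
drifted_scores = [0.5 + i / 200 for i in range(100)]

psi_same = population_stability_index(baseline_scores, baseline_scores)
psi_drifted = population_stability_index(baseline_scores, drifted_scores)

# 0.25 is a rule-of-thumb threshold, not a regulatory figure.
needs_review = psi_drifted > 0.25
```

In practice a check like this would run on a schedule for each monitored input and output, with a breach prompting a human review of whether the model remains fit for purpose rather than an automatic action.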

Goh also pointed out that there is an increasing trend of democratisation of AI and empowerment of users within the financial institutions. This means there could be a risk of shadow IT or shadow AI, which could lead to data compromise.

Goh stressed the need for continuous oversight, a strong risk culture, and ongoing training, so that staff understand which tools are allowed and how to use AI safely.
